Techniques now emerging from research labs will help make it affordable for next-generation autonomous vehicles to keep out of harm’s way.

There’s a dirty little secret associated with the autonomous vehicle (AV) prototypes now feeling their way along roads and byways: Many of them depend on expensive LiDAR (light detection and ranging) sensors to detect and avoid objects in their path. One reason LiDAR units are expensive is that most commercial units scan the scene with lasers by means of rotating mirrors. The rotation mechanisms can be intricate and tough to mass produce economically. This is why early AV demonstrations used LiDAR units costing several times more than the cars on which they were installed. Prices have come down from the early days, but LiDAR having the range and resolution needed for vehicular use remains pricey.

Consequently, there is a quest to come up with ways of deploying vehicular LiDAR that eliminate the costly rotating mechanics. In this regard, several start-up firms have devised methods of scanning lasers across a field of view that are completely solid state. Their first target is to field a solid-state LiDAR going for below $500. Eventually they see LiDAR units costing half that figure.

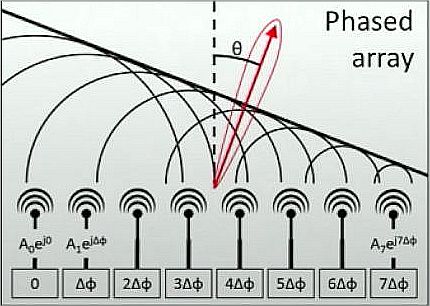

Two techniques in particular are under intense development. OPA (Optical Phased Array) technology is borrowed from phased-array radar, where different phase-shifted versions of a single source signal are fed to the elements of an antenna array. The signals reinforce one another where they are in phase and cancel where they are out of phase. The second technique, FMCW (Frequency-Modulated Continuous Wave) technology, differs from ordinary pulsed radar in that it continuously transmits a signal whose frequency changes linearly over time. The returning echoes can be readily processed to provide not only the distance of the object creating the echo but also its relative velocity.

Both methods have their strengths. To understand the differences, it is useful to examine specific implementations now under development in research labs.

Why LiDAR and not radar?

A typical requirement for AV systems is that they discern two separate objects in close proximity, such as a construction worker and a bridge column, that are 200 m away. That kind of performance demands an AV system able to resolve features with 20-cm dimensions at that distance. In radar or LiDAR, the factor that limits resolution is the degree to which waves spread as they travel from the source to the target and back. It turns out that 60-GHz automotive radar can at best resolve objects having dimensions exceeding 5 m sitting 100 m from the car. Finer resolution would require a radar source that is impractically large. In contrast, LiDAR operating at around 200 THz (about 1,500-nm wavelength) can employ a source having dimensions on the order of 1.5 mm.
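The figures above can be checked with a rough diffraction estimate. The sketch below assumes the small-angle approximation, in which beam spread is about wavelength divided by aperture; the function name and the choice of approximation are illustrative and not taken from any particular LiDAR design.

```python
C = 299_792_458.0  # speed of light in m/s


def aperture_for_resolution(frequency_hz, range_m, feature_m):
    """Approximate source aperture (m) needed to resolve feature_m at range_m.

    Assumes spot size at range ~ wavelength * range / aperture,
    which is a small-angle diffraction estimate only.
    """
    wavelength_m = C / frequency_hz
    return wavelength_m * range_m / feature_m


# LiDAR at about 200 THz resolving 20 cm at 200 m
lidar_aperture = aperture_for_resolution(200e12, 200.0, 0.20)

# 60-GHz radar resolving 5 m at 100 m
radar_aperture = aperture_for_resolution(60e9, 100.0, 5.0)

# 60-GHz radar asked to match the LiDAR requirement (20 cm at 200 m)
radar_matched = aperture_for_resolution(60e9, 200.0, 0.20)

print(f"LiDAR aperture: {lidar_aperture * 1e3:.2f} mm")
print(f"Radar aperture (5 m at 100 m): {radar_aperture * 1e2:.1f} cm")
print(f"Radar aperture (20 cm at 200 m): {radar_matched:.1f} m")
```

Under this approximation the LiDAR case works out to about 1.5 mm, while a 60-GHz radar would need an aperture of several meters to meet the same 20-cm requirement.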

Thus resolution considerations favor the LiDAR approach. Additionally, the need to dissipate heat generated by the imaging system also favors the use of laser light rather than radar signals.

LiDAR systems generally scan a laser around the scene they digitize. The scanning mechanism is where much of the cost of existing systems arises. Solid-state LiDAR eliminates the mechanical scanning through three general approaches: flash LiDAR, MEMS-based mirrors, and optical phased arrays.

Flash LiDAR illuminates the entire field of view with a wide diverging laser beam in a single pulse, so there is no need to scan the laser across the scene. Each pixel in a sensor array collects 3D location and intensity information from the returning reflections. Flash LiDAR is advantageous when the camera, scene, or both are moving because it illuminates the entire scene instantaneously. A difficulty with the scheme is that it requires a powerful burst of laser light to reach the range necessary for automotive use. The wavelength used must be such that high power levels do not damage human retinas. But inexpensive silicon imagers don’t work well in the eye-safe spectrum. So rather pricey gallium-arsenide imagers must be used instead.

MEMS systems use mirrored surfaces of silicon crystals to scan LiDAR lasers around a scene. Piezoelectric materials or electromagnetic fields are used to move the MEMS mirrors, eliminating the need for discrete mechanical parts. One difficulty with MEMS-based approaches is that the mirrors may need relatively large dimensions to illuminate scenes with enough laser light for the range automotive systems require. The resulting resonances can make MEMS mirrors susceptible to the shock and vibration frequencies that characterize vehicles. It may also be tough to keep the MEMS-reflected laser beam sufficiently focused to handle the ranges required for automotive needs. So aspheric lenses may be needed that add to costs.

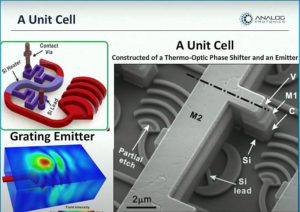

OPA LiDAR uses a series of nanophotonic antennas each fed from a phase shifter creating a delayed version of a signal from a single laser. The point of the phase delays is to create an interference pattern that effectively scans the laser across the field of view. Specifically, a typical OPA scheme would launch one undelayed laser beam, a second that is slightly delayed, a third that is delayed a bit more, and so on. The result is a wavefront that is launched at some angle to the array. The launch angle is varied by changing the amount of delay in the optical delay lines.
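The relationship between the per-element delay and the launch angle can be sketched for an idealized uniform linear array. The function names, the 2-µm emitter pitch, and the sign convention below are assumptions for illustration; real OPAs must also contend with grating lobes and non-ideal emitters.

```python
import math


def phase_step_for_angle(angle_deg, pitch_m, wavelength_m):
    """Phase difference (rad) between adjacent emitters for a given launch angle."""
    return 2 * math.pi * pitch_m * math.sin(math.radians(angle_deg)) / wavelength_m


def steering_angle_deg(phase_step_rad, pitch_m, wavelength_m):
    """Launch angle (degrees from broadside) produced by a uniform phase step."""
    s = phase_step_rad * wavelength_m / (2 * math.pi * pitch_m)
    if abs(s) > 1:
        raise ValueError("phase step does not correspond to a real launch angle")
    return math.degrees(math.asin(s))


def element_phases(n_elements, phase_step_rad):
    """Phase applied at each emitter: 0, step, 2*step, ... wrapped to [0, 2*pi)."""
    return [(i * phase_step_rad) % (2 * math.pi) for i in range(n_elements)]


# Hypothetical array: 905-nm laser, 2-um emitter pitch, steer 10 degrees off broadside
step = phase_step_for_angle(10.0, 2e-6, 905e-9)
phases = element_phases(8, step)
print(f"phase step: {step:.3f} rad")
print(f"recovered angle: {steering_angle_deg(step, 2e-6, 905e-9):.2f} deg")
```

The first emitter is undelayed and each subsequent emitter adds one more phase step, which is the progression of delays described above.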

OPA uses principles first devised for phased-array radar. In phased-array radar, beams are steered by use of electronically controlled signal delay lines. The delay lines can be realized via varactor diodes that change capacitance with voltage, nonlinear dielectrics such as barium strontium titanate, or ferrite materials such as yttrium iron garnet. In OPA LiDAR, the delay lines take the form of tunable phase shifters. The phase shifters are built from material whose index of refraction can be changed dynamically. (Changing the index of refraction changes the velocity of light through the material, thus implementing a delay line.) There are several ways of changing the index of refraction in the phase shifters. One widely used technique employs waveguide material whose index of refraction is sensitive to heat. OPAs may also use more exotic approaches to implement a delay such as cycling light waves through the waveguide multiple times.
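To give a feel for how a heat-sensitive index of refraction becomes a phase delay, the sketch below estimates the phase shift of a heated waveguide section. It assumes a silicon waveguide with a thermo-optic coefficient of roughly 1.8e-4 per kelvin near 1,550 nm and a 100-µm heater length; both figures are illustrative assumptions, not values from any product discussed here.

```python
import math

# Assumed thermo-optic coefficient of silicon near 1,550 nm (per kelvin)
DN_DT_SILICON = 1.8e-4


def thermal_phase_shift(length_m, delta_t_k, wavelength_m, dn_dt=DN_DT_SILICON):
    """Phase shift (rad) from heating a waveguide section by delta_t_k."""
    delta_n = dn_dt * delta_t_k  # change in index of refraction
    return 2 * math.pi * length_m * delta_n / wavelength_m


def temperature_rise_for_phase(phase_rad, length_m, wavelength_m, dn_dt=DN_DT_SILICON):
    """Temperature rise (K) needed to reach a target phase shift."""
    return phase_rad * wavelength_m / (2 * math.pi * length_m * dn_dt)


# Hypothetical 100-um heated section at 1,550 nm: heating needed for a pi shift
delta_t = temperature_rise_for_phase(math.pi, 100e-6, 1550e-9)
print(f"temperature rise for a pi phase shift: {delta_t:.1f} K")
```

Under these assumptions a half-wave shift takes a temperature rise of a few tens of kelvin per shifter, which hints at why power consumption matters when an array contains thousands of them.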

Optical delay lines allow OPAs to scan a laser across a field of view in one plane. But OPAs can also implement scanning on multiple planes in a manner analogous to a raster pattern. There are various means of accomplishing this 2D scanning, but one straightforward approach is to change the frequency of the laser light for each swept plane. Another strategy is to simply stack multiple OPAs and use multiple laser beams.

One difficulty with OPA is that the approach typically entails significant computational complexity. OPAs with enough horsepower to handle automotive uses could include on the order of 10,000 phase shifters, each with its own control circuits. Power consumption can also be an issue if phase shifting employs thermal methods.

However, firms working on OPA designs say neither of these issues is a showstopper. One in this camp is Quanergy Systems, Inc. in Sunnyvale, Calif. Quanergy makes both mechanical LiDAR for mapping, security, and smart city and smart space applications, and OPA LiDAR for industrial uses and people-flow management. It also is developing OPA LiDAR for vehicular use.

Quanergy CTO Tianyue Yu says the firm’s OPA design uses thermo-optical and electro-optical phase shifting. In its current commercial products, Quanergy’s OPA emitters scan left-to-right to form a 2D pattern in the horizontal axis. Multiple OPA emitters are stacked together to produce the 3D scan. Yu says Quanergy also has an OPA design in development where the beam can scan both left-to-right and top-to-bottom.

She further points out that the beam can be pointed at any angle within the field of view so the pattern traced out isn’t limited to a left-to-right sequential scan. This behavior makes possible a “dynamic zoom” feature unique to OPA LiDAR wherein the system can respond intelligently to focus in on areas within the field of view that merit a closer look. The “dynamic zoom” function allows higher resolution or a high frame rate in regions of interest based on situational awareness without ignoring other regions of the field of view. Also, there could be multiple regions of interest depending on the environment.

Moreover, it doesn’t take cutting-edge chip fab technology to realize such features. Yu says the OPA detector is fabbed in Singapore while TSMC in Taiwan handles the emitter. The detector IC occupies 1.5 cm². It is characterized by feature dimensions in the tens-of-microns range. Yu also says the detector ASIC is not “super fast,” and all chip fabrication processes that go into the OPA are very mature.

Quanergy uses a laser operating at 905 nm (dubbed the near-infrared range) so the OPA can employ silicon-based light detectors and for compatibility with CMOS circuitry. Economics dictate operation at this wavelength: Some OPAs use 1,550-nm (short-wave infrared) wavelengths which require use of more expensive indium-gallium-arsenide (InGaAs) detectors. The advantage of the longer wavelength is a higher maximum permissible energy level while remaining eye safe.

Doppler LiDAR?

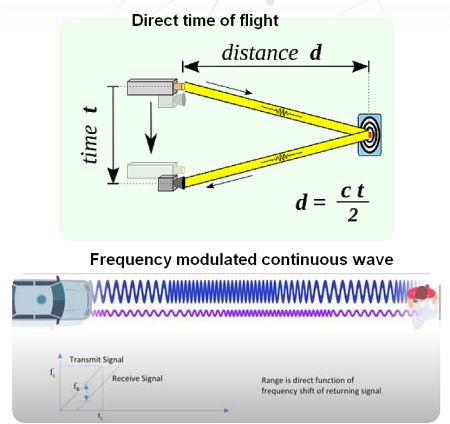

Most LiDAR units, including Quanergy’s OPA units, use time-of-flight (ToF) to detect objects. All ToF sensors measure distance using the time it takes photons to travel from the sensor emitter to a target and back to the sensor receiver.

There are two types of ToF schemes, direct and indirect. Direct ToF sensors emit light pulses on the order of nanoseconds and measure the time elapsing for some of the emitted light to return. Indirect ToF sensors send out continuous, modulated light and measure the phase of the reflected light to calculate the distance.

The short light bursts that direct ToF systems employ typically minimize the effects of ambient light and motion blur. The duty cycle of the illumination is also brief, leading to low overall power dissipation. It is also possible to avoid interference from other pulsed ToF systems by judicious timing of the pulse bursts, perhaps via dynamic randomization of the pulse bursts. But because the pulse width of the transmitted light and the shutter must be the same, the system may need a precision measured in picoseconds. Similarly, illumination pulse widths are necessarily short with fast rising/falling edges (<1 nsec), putting a premium on a well-designed laser driver.
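The direct ToF relation, and the reason timing precision ends up in the picosecond range, can be sketched in a few lines. The function names are illustrative, and the calculation ignores detector and electronics delays.

```python
C = 299_792_458.0  # speed of light in m/s


def direct_tof_distance(round_trip_s):
    """Distance (m) to the target given the measured round-trip time."""
    return C * round_trip_s / 2


def round_trip_time(distance_m):
    """Round-trip time (s) for light to reach a target and return."""
    return 2 * distance_m / C


t_200m = round_trip_time(200.0)  # target at automotive range
t_1cm = round_trip_time(0.01)    # timing step equivalent to 1 cm of range

print(f"round trip to 200 m: {t_200m * 1e6:.3f} us")
print(f"timing step for 1 cm of range: {t_1cm * 1e12:.1f} ps")
```

A target 200 m away returns its echo in a little over a microsecond, while resolving 1 cm of range corresponds to a timing step of tens of picoseconds.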

Indirect ToF sensors generally emit light modulated at a single frequency, in the manner of a modulated carrier wave. The principle of operation uses the fact that the modulation frequency determines the distance over which the emitted wave completes a full cycle. When an object reflects the modulated light, the sensor detects the shift in the phase of the returning light. Knowing the modulation frequency of the emitted light, the phase shift of the returning light, and the speed of light allows the sensor to calculate the distance to the object.
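The phase-to-distance calculation can be written out as a minimal sketch. The 10-MHz modulation frequency in the example is an arbitrary illustrative value, and the function name is hypothetical.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def indirect_tof_distance(phase_rad, mod_freq_hz):
    """Distance (m) from the measured phase shift of the modulation envelope.

    A full 2*pi of phase corresponds to one modulation wavelength of
    round-trip travel, hence the factor of 4*pi rather than 2*pi.
    """
    return C * phase_rad / (4 * math.pi * mod_freq_hz)


# Example: a half-cycle (pi) phase shift at an assumed 10-MHz modulation
d = indirect_tof_distance(math.pi, 10e6)
print(f"distance: {d:.3f} m")
```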

The disadvantage of indirect ToF is the possibility of range ambiguity. Because the illumination signal has periodicity, any phase measurement will wrap around every 2π, thus leading to aliasing. For a system with only one modulation frequency, the aliasing distance will also be the maximum measurable distance. Multiple modulation frequencies can be used to solve the problem, with the true distance of an object determined when multiple phase measurements with different modulation frequencies agree on the estimated distance. This multiple modulation frequency scheme can also be useful in reducing multipath errors when the reflected light from an object hits another before returning to the sensor.
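The aliasing distance and the two-frequency fix can be illustrated with a small brute-force sketch. The modulation frequencies (10 MHz and 8 MHz), the 70-m search limit, and the matching strategy are assumptions chosen for clarity; production sensors use more refined unwrapping methods.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def unambiguous_range(mod_freq_hz):
    """Distance (m) at which the measured phase wraps around 2*pi."""
    return C / (2 * mod_freq_hz)


def wrapped_phase(distance_m, mod_freq_hz):
    """Phase (rad) an ideal sensor would report, wrapped to [0, 2*pi)."""
    return (4 * math.pi * mod_freq_hz * distance_m / C) % (2 * math.pi)


def candidate_distances(phase_rad, mod_freq_hz, max_range_m):
    """All distances up to max_range_m consistent with one wrapped phase."""
    r = unambiguous_range(mod_freq_hz)
    base = phase_rad / (2 * math.pi) * r
    out = []
    k = 0
    while base + k * r <= max_range_m:
        out.append(base + k * r)
        k += 1
    return out


def resolve_distance(phase_a, freq_a, phase_b, freq_b, max_range_m):
    """Pick the pair of candidates on which the two frequencies best agree."""
    best = None
    for da in candidate_distances(phase_a, freq_a, max_range_m):
        for db in candidate_distances(phase_b, freq_b, max_range_m):
            err = abs(da - db)
            if best is None or err < best[0]:
                best = (err, (da + db) / 2)
    return best[1]


# A target at 40 m lies beyond the aliasing distance of either frequency alone
true_d = 40.0
fa, fb = 10e6, 8e6
estimate = resolve_distance(wrapped_phase(true_d, fa), fa,
                            wrapped_phase(true_d, fb), fb, max_range_m=70.0)
print(f"aliasing distance at 10 MHz: {unambiguous_range(fa):.2f} m")
print(f"resolved distance: {estimate:.3f} m")
```

Each frequency alone wraps at under 20 m, yet the two phase readings agree on only one distance within the search limit.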

ToF LiDAR is known for its ability to figure out the distance to the objects it illuminates. However, it can also be used to figure out the velocity of the objects in its field of view by comparing object locations in subsequent frames. The requirement for synthesizing this velocity information is a super-precise time base.

There is another radar technique more specifically designed to yield velocity information. A few firms are applying the same technique to LiDAR, sometimes dubbing it 4D LiDAR because it adds velocity to the three spatial dimensions. FMCW (Frequency-Modulated Continuous Wave) radar radiates a continuous wave but changes its operating frequency during the measurement.

The point of the FM in FMCW is to generate a time reference for measuring the distance of stationary objects. The transmitted signal increases or decreases in frequency periodically. When the detector receives an echo signal, it compares the phase or frequency of the transmitted and the received signal. The comparison gives a distance measurement because the frequency difference is proportional to the distance. If the reflecting object has a radial speed with respect to the receiving antenna, the speed gives the echo a Doppler frequency. The radar measures both the difference frequency caused by the distance and the additional Doppler frequency caused by the speed. The kind of frequency or phase modulation the radar uses generally depends on the velocities expected in the objects being imaged. The frequency deviation per unit of time basically determines the radar resolution.
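One common way to separate the distance term from the Doppler term is a triangular sweep, in which an up-chirp is followed by a down-chirp. The sketch below assumes that scheme along with an idealized linear chirp; the sweep bandwidth, chirp duration, wavelength, and sign convention (positive speed means the target is approaching) are illustrative assumptions, not parameters of any system described here.

```python
C = 299_792_458.0  # speed of light in m/s


def beat_frequencies(range_m, closing_speed_mps, bandwidth_hz, chirp_s, wavelength_m):
    """Ideal beat frequencies (Hz) on the up-chirp and down-chirp."""
    f_range = 2 * range_m * bandwidth_hz / (C * chirp_s)  # term caused by distance
    f_doppler = 2 * closing_speed_mps / wavelength_m      # term caused by radial speed
    return f_range - f_doppler, f_range + f_doppler


def range_and_velocity(f_up_hz, f_down_hz, bandwidth_hz, chirp_s, wavelength_m):
    """Recover range (m) and closing speed (m/s) from the two beat frequencies."""
    f_range = (f_up_hz + f_down_hz) / 2
    f_doppler = (f_down_hz - f_up_hz) / 2
    range_m = C * f_range * chirp_s / (2 * bandwidth_hz)
    speed_mps = f_doppler * wavelength_m / 2
    return range_m, speed_mps


def range_resolution(bandwidth_hz):
    """Range resolution (m) set by the total frequency sweep."""
    return C / (2 * bandwidth_hz)


# Hypothetical FMCW LiDAR: 1-GHz sweep in 10 us at 1,550 nm,
# target at 100 m closing at 20 m/s
B, T, LAM = 1e9, 10e-6, 1550e-9
f_up, f_down = beat_frequencies(100.0, 20.0, B, T, LAM)
r, v = range_and_velocity(f_up, f_down, B, T, LAM)
print(f"range: {r:.2f} m, closing speed: {v:.2f} m/s")
print(f"range resolution: {range_resolution(B) * 100:.1f} cm")
```

Averaging the two beat frequencies isolates the distance term, and taking half their difference isolates the Doppler term, so a single up-down sweep yields both range and radial velocity.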

A point to note is that the FMCW approach is good at providing the instantaneous radial velocity (toward or away from the detector) of the objects it images. FMCW LiDAR is a relatively new technology; it is not clear how advantageous it may be at sensing lateral (tangential or side-to-side) velocity, as from cross traffic, pedestrians, and so forth.
