Artificial intelligence (AI), machine learning (ML), and deep learning (DL) are fast-changing and complex fields. Until relatively recently, there were no performance benchmarks for AI, ML, or DL systems. That is changing. Today, several industry organizations have developed AI and ML benchmarks, with more complex benchmarks for DL coming soon. Those efforts stretch from the edge to the cloud.

The availability of benchmarks gives designers a common basis for comparing various AI and ML approaches. Most initial AI and ML benchmarks have been fairly generic. The next generation of AI and ML benchmarks is more specific to use cases ranging from edge devices to ADAS designs. That will give designers even better tools for comparing AI and ML solutions from different vendors.

Among the organizations focusing on AI and ML benchmarks are EEMBC, MLPerf, TPC, and AI-Benchmark. In addition, a new Silicon Integration Initiative white paper is focused on factors limiting the use of AI and ML in semiconductor electronic design automation.

Selected existing AI and ML benchmarks

AI-Benchmark was designed and developed by Andrey Ignatov with the Computer Vision Lab at ETH Zurich. This edge AI benchmark consists of 46 AI and computer vision tests performed by neural networks running on a smartphone. It measures over 100 different aspects of AI performance, including speed, accuracy, and initialization time. The neural networks considered span a comprehensive range of architectures, allowing users to assess the performance and limits of various approaches used to solve different AI tasks. A detailed description of the 14 benchmark sections is available on the AI Benchmark website.

The EEMBC MLMark is an ML benchmark designed to measure the performance and accuracy of embedded inference. The motivation for developing this benchmark grew from the lack of standardization of the environment required for analyzing ML performance. MLMark is targeted at embedded developers and attempts to clarify the environment to facilitate not only performance analysis of today’s offerings but also the tracking of trends over time to improve new ML architectures. This benchmark focuses on three measurements deemed significant in the majority of commercial ML applications:

- Throughput – measured in inferences per second

- Latency – defined as single-inference processing time

- Accuracy – comparing network performance to human-annotated “ground truth”
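
The three measurements above can be illustrated with a minimal Python sketch. It is not MLMark code and does not reproduce MLMark's harness or run rules; `measure`, `toy_infer`, and the sample data are hypothetical names chosen for illustration, and the loop assumes one inference at a time with no batching or concurrency.

```python
import time


def measure(infer, inputs, labels):
    """Return (throughput, mean_latency_s, accuracy) for a one-at-a-time loop."""
    latencies = []
    correct = 0
    start = time.perf_counter()
    for x, y in zip(inputs, labels):
        t0 = time.perf_counter()
        pred = infer(x)
        latencies.append(time.perf_counter() - t0)  # single-inference time
        correct += int(pred == y)                    # compare to ground truth
    elapsed = time.perf_counter() - start
    n = len(latencies)
    # Throughput: inferences per second over the whole run.
    # Latency: mean single-inference processing time in seconds.
    # Accuracy: fraction of predictions matching the labels.
    return n / elapsed, sum(latencies) / n, correct / n


def toy_infer(x):
    # Hypothetical stand-in for a real model: predicts 1 for positive inputs.
    return 1 if x > 0 else 0


inputs = [-2, -1, 1, 2, 3]
labels = [0, 0, 1, 1, 0]  # the last label deliberately disagrees with toy_infer
throughput, latency, accuracy = measure(toy_infer, inputs, labels)
print(f"{throughput:.0f} inf/s, {latency * 1e6:.2f} us/inference, accuracy {accuracy:.2f}")
```

In a real harness, throughput is usually measured with batching or concurrent streams, so it is not simply the reciprocal of single-inference latency.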

Version 1.0 of the EEMBC MLMark includes source code (and libraries) for:

- Intel CPUs, GPUs, and neural compute sticks using OpenVINO

- NVIDIA GPUs using TensorRT

- Arm Cortex-A CPUs and Arm Mali family of GPUs using Neon technology and OpenCL, respectively

The MLPerf consortium recently released results for MLPerf Training v0.7, the third round of results from its ML training performance benchmark suite. The MLPerf Training benchmark suite measures the time it takes to train one of eight machine learning models to a standard quality target in tasks including image classification, recommendation, translation, and playing Go. This version of MLPerf includes two new benchmarks and one substantially revised benchmark as follows:

- BERT: Bidirectional Encoder Representations from Transformers (BERT) trained with Wikipedia is a leading-edge language model that is used extensively in natural language processing tasks. Given a text input, language models predict related words and are employed as a building block for translation, search, text understanding, answering questions, and generating text.

- DLRM: Deep Learning Recommendation Model (DLRM) trained with Criteo AI Lab’s Terabyte Click-Through-Rate (CTR) dataset is representative of a wide variety of commercial applications that touch the lives of nearly every individual on the planet. Common examples include recommendations for online shopping, search results, and social media content ranking.

- Mini-Go: A reinforcement learning benchmark similar to Mini-Go from v0.5 and v0.6, but using a full-size 19×19 Go board, which is more reflective of research.
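
The time-to-train metric described above (wall-clock time until a model reaches a quality target) can be sketched as follows. This is an illustrative outline only, not MLPerf's reference code or rules; `time_to_train`, `ToyModel`, and the 0.75 target are hypothetical.

```python
import time


def time_to_train(train_one_epoch, evaluate, target_quality, max_epochs=100):
    """Train until evaluate() reaches target_quality; return (seconds, epochs)."""
    start = time.perf_counter()
    for epoch in range(1, max_epochs + 1):
        train_one_epoch()
        if evaluate() >= target_quality:
            # The clock stops only once the quality target is met.
            return time.perf_counter() - start, epoch
    raise RuntimeError("target quality not reached within max_epochs")


class ToyModel:
    """Hypothetical model whose quality score rises by 0.1 per epoch."""

    def __init__(self):
        self.epochs_done = 0

    def train_one_epoch(self):
        self.epochs_done += 1

    def evaluate(self):
        return self.epochs_done / 10


model = ToyModel()
seconds, epochs = time_to_train(model.train_one_epoch, model.evaluate, target_quality=0.75)
print(f"reached target after {epochs} epochs in {seconds:.6f} s")
```

Fixing the quality target makes results comparable: a system cannot look faster by stopping early at lower accuracy.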

AI and ML benchmarks under development

The Transaction Processing Performance Council (TPC) is working on an industry-standard AI benchmark that represents key AI use cases most relevant to the server and cloud industries. These use cases test algorithms ranging from ML to DL. Following the philosophy of the TPC, TPC-AI will model an end-to-end data management pipeline, including data generation, data cleansing, data manipulation, and data featurization. The TPC will allow publication and auditing of TPC results based on available AI solutions.
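
To make the four pipeline stages concrete, here is a toy Python sketch that chains data generation, cleansing, manipulation, and featurization. It does not reflect the TPC-AI specification; the function names, record layout, and injected missing value are all assumptions made for illustration.

```python
import random


def generate(n, seed=0):
    """Data generation: synthetic purchase records for three customers."""
    rng = random.Random(seed)
    rows = [{"customer": i % 3, "amount": round(rng.uniform(1, 100), 2)}
            for i in range(n)]
    rows[0]["amount"] = None  # inject one missing value for the cleansing stage
    return rows


def cleanse(rows):
    """Data cleansing: drop records with a missing amount."""
    return [r for r in rows if r["amount"] is not None]


def manipulate(rows):
    """Data manipulation: group purchase amounts by customer."""
    groups = {}
    for r in rows:
        groups.setdefault(r["customer"], []).append(r["amount"])
    return groups


def featurize(groups):
    """Data featurization: per-customer purchase count and mean amount."""
    return {c: {"count": len(a), "mean": sum(a) / len(a)}
            for c, a in groups.items()}


features = featurize(manipulate(cleanse(generate(9))))
for customer in sorted(features):
    print(customer, features[customer])
```

An end-to-end benchmark would time all of these stages together with model training and serving, rather than the learning step alone.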

The ULPMark benchmark line from EEMBC is now adding ML inference and developing a new benchmark, the ULPMark-ML. The effort to standardize what is known as “tinyML” or low-power ML is underway, with a dozen companies participating in EEMBC’s effort. The goal of this benchmark is to create a standardized suite of tasks that will measure a device’s inference energy efficiency as a single figure of merit. This is expected to be useful from the cloud to the edge.
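
As a rough illustration of an energy-efficiency figure of merit, the sketch below computes inferences per joule from average power and run time. The article does not say how ULPMark-ML defines its score, so this metric, the function name `inferences_per_joule`, and the sample numbers are assumptions rather than the benchmark's actual formula.

```python
def inferences_per_joule(num_inferences, avg_power_watts, duration_seconds):
    """Assumed figure of merit: inferences completed per joule consumed."""
    energy_joules = avg_power_watts * duration_seconds  # energy = power x time
    if energy_joules <= 0:
        raise ValueError("energy must be positive")
    return num_inferences / energy_joules


# Hypothetical device: 500 inferences in 8 s at an average draw of 0.25 W.
score = inferences_per_joule(num_inferences=500, avg_power_watts=0.25,
                             duration_seconds=8.0)
print(f"{score:.1f} inferences per joule")
```

A single number of this kind allows devices with very different power budgets to be ranked on one scale, at the cost of hiding latency and accuracy differences.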

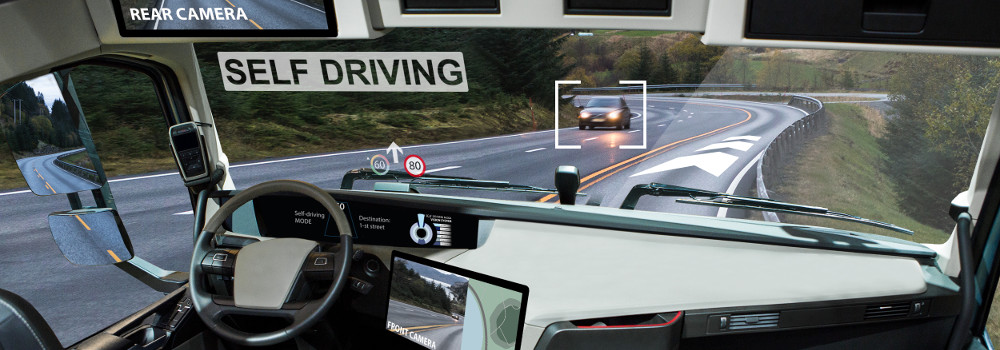

Next in line for development at EEMBC is an extension of the ADASMark benchmark suite to include ML capabilities. The ADASMark is a performance measurement and optimization tool for automotive companies building next-generation advanced driver-assistance systems (ADAS). Intended to analyze the performance of SoCs used in autonomous driving, ADASMark utilizes real-world workloads that represent highly parallel applications such as surround-view stitching, segmentation, and convolutional neural network (CNN) traffic sign classification. The ADASMark benchmarks stress various forms of compute resources, such as the CPU, GPU, and hardware accelerators, and thus allow the user to determine the optimal utilization of available compute resources.

And looking toward future developments in AI and ML, the Silicon Integration Initiative has issued a white paper that identifies a common data model as the most critical need to accelerate the use of AI and ML in semiconductor electronic design automation. The data model should serve the needs of chip developers, EDA tool developers, IP providers, and researchers.

The next generation of AI and ML benchmarks focusing on low power and automotive-specific applications foreshadows the next generation of energy-efficient AI and ML technologies. The increasing focus on energy-efficient AI and ML solutions will be the subject of the third and final article in this FAQ series on AI and ML technologies.

References

AI Benchmark, Computer Vision Lab at ETH Zurich

MLMark, EEMBC

MLPerf Training v0.7, MLPerf Consortium

ULPMark-ML, EEMBC
