Now in its seventh year, Design World’s LEAP Awards showcase the best engineering innovations across several design categories. This undertaking wouldn’t be possible without the commitment and support of the engineering community.

The editorial team assembles OEM design engineers and academics each year to create an independent judging panel. Below is their selection for this year’s LEAP Awards winners in the Embedded Computing category.

Congratulations to the winners of the LEAP Awards for Embedded Computing, who are profiled here.

Gold

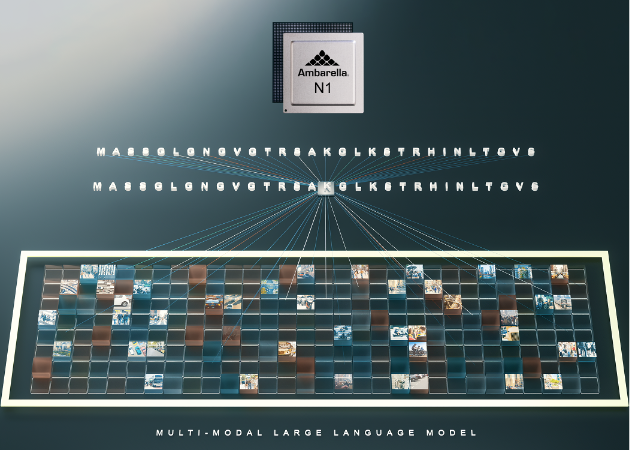

Ambarella’s powerful new N1 system-on-chip (SoC) series brings generative AI capabilities to edge endpoint devices. The series is optimized for running multimodal large language models (LLMs) with up to 34 billion parameters in an extremely low-power footprint, without an internet connection. Multimodal LLMs run on the N1 at a fraction of the power per inference of leading GPUs and other AI accelerators; specifically, the N1 is up to three times more power-efficient per generated token.

For example, the N1 runs Llama2-13B in single-streaming mode at under 50 W. Combined with the ease of integrating pre-trained and fine-tuned models, this makes the SoC well suited to helping OEMs quickly deploy generative AI in power-sensitive applications.

Generative AI promises a step-function improvement in computer vision processing, bringing context and scene understanding to devices ranging from security cameras and autonomous robots to industrial equipment. Examples of the on-device LLM and multimodal processing enabled by the N1 include smart contextual searches of security footage, robots controlled with natural-language commands, and AI assistants that handle tasks ranging from code generation to text and image generation.

Most of these examples rely heavily on camera input and natural-language understanding, and they benefit from on-device GenAI processing through faster response, better privacy, and lower total cost of ownership. Ambarella’s N1 SoC architecture is natively well suited to processing video and AI simultaneously at very low power. Unlike a standalone AI accelerator, this full-function SoC processes multimodal LLMs efficiently while still performing all system functions.

Silver

The EFR32MG26 multiprotocol wireless SoCs are built to be future-proof and are ideal for mesh IoT connectivity using the Matter, OpenThread, and Zigbee protocols in smart home, lighting, and building automation products.

The MG26 belongs to an SoC family whose key features include high-performance 2.4 GHz RF, low current consumption, an AI/ML hardware accelerator, and state-of-the-art Secure Vault™ security. Together, these let IoT device makers build smart, robust, and energy-efficient products that are protected against remote and local cyberattacks. The MG26 uses an Arm® Cortex®-M33 running at up to 78 MHz, with up to 3 MB of flash and 512 kB of RAM, enabling more complex applications and providing headroom for Matter over Thread. The MG26 is ideal for gateways and hubs, LED lighting, switches, sensors, locks, glass-break detection, predictive maintenance, wake-word detection, and more.

Bronze

Synaptics

Astra AI Native Edge IoT Platform

The Synaptics Astra™ platform is an AI-native solution that addresses the needs of a new generation of smart IoT Edge devices at a time when local, intelligent, private, and secure computation on data-gathering devices is increasingly critical. It combines hardware, software, development tools, ecosystem support, and wireless connectivity to enable rapid development of AI-enabled Edge devices across a variety of applications with an optimal balance of power, performance, and cost.

The hardware foundation is the SL-Series family of AI-native embedded processors. The family provides the right levels of computing performance and power consumption for a wide range of consumer, enterprise, and industrial Edge IoT applications. These multi-core Linux or Android systems on chip (SoCs) are based on Arm® Cortex®-A series CPUs and feature GPU, NPU, and DSP hardware accelerators for edge inferencing and multimedia processing of audio, video, vision, image, voice, and speech.

- The SL1680 is based on a quad-core Arm Cortex-A73 64-bit CPU, a 7.9 TOPS NPU, and a high-efficiency GPU.

- The SL1640 is based on a quad-core Arm Cortex-A55 processor, a 1.6+ TOPS NPU, and a GE9920 GPU.

- The SL1620 is based on a quad-core Arm Cortex-A55 CPU subsystem and a feature-rich GPU for advanced graphics and AI acceleration.

The SL-Series is supported by the Astra Machina™ Foundation Series development kit, which helps both AI beginners and experts quickly unlock the platform’s AI processing capabilities and the matching Synaptics Wi-Fi® and Bluetooth® wireless connectivity.

Astra supports a robust open-source AI framework and a thriving partner ecosystem.
